Automated download/import of community statistics

-

Is there a way to access engagement stats for a community to build a (daily) time series? Something along the lines of exporting the data every 24 hours at 2:00 UTC.

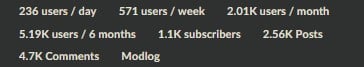

This is the data I am referring to:

Perhaps an API of some sort: a formal method rather than writing a scraper.

-

Is there a way to access engagement stats for a community to make a (daily) time series? Something along the lines of exporting data ever 24 hours at 2:00 UTC.

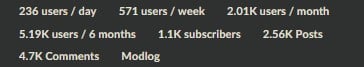

This is the data I am referring to:

Perhaps an API of some sort. A formal method that goes beyond writing a scrapper.

IDK if this counts as "writing a scraper," but from `tmux` you could run:

```shell
# Write the CSV header once
echo '"Daily Users","Weekly Users","Monthly Users","6-Month Users","Community ID","Subscribers","Posts","Comments"' > stats.csv
# Every 24 hours, fetch the community's counts and append them as one CSV row
while true; do
  curl -s -H "Authorization: Bearer YOUR_JWT_TOKEN" "https://YOUR_INSTANCE/api/v3/community?name=COMMUNITY_NAME" \
    | jq -r '[.community_view.counts | (.users_active_day, .users_active_week, .users_active_month, .users_active_half_year, .community_id, .subscribers, .posts, .comments)] | @csv' >> stats.csv
  sleep 24h
done
```

Get your JWT token by opening your browser's web developer tools and looking at the headers of one of its requests.
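If you'd rather hit 02:00 UTC exactly than rely on a `sleep 24h` loop (which drifts a little each day by the time the request takes, and doesn't survive a reboot), a rough sketch is a one-shot script that appends a single timestamped row per run and leaves the scheduling to cron. The script name `collect_stats.sh`, the functions `counts_to_csv` and `collect_once`, and the extra `Timestamp (UTC)` column are my own hypothetical additions, not anything Lemmy provides. It also drops the `Authorization` header, assuming the community endpoint is publicly readable on your instance; add the `-H` line back if it isn't.

```shell
#!/usr/bin/env bash
# Hypothetical one-shot collector (collect_stats.sh): each run appends ONE
# timestamped row, so cron handles the "daily at 02:00 UTC" schedule.
set -euo pipefail

# Read a /api/v3/community JSON response on stdin and print one CSV row,
# prefixed with the UTC timestamp passed as $1.
counts_to_csv() {
  jq -r --arg ts "$1" '[$ts] + [.community_view.counts
    | (.users_active_day, .users_active_week, .users_active_month,
       .users_active_half_year, .community_id, .subscribers, .posts, .comments)]
    | @csv'
}

# Fetch one community's stats and append a row to the CSV file,
# writing the header first if the file does not exist yet.
# Assumption: the endpoint is readable without an Authorization header.
collect_once() {
  local instance="$1" community="$2" out="$3"
  if [ ! -f "$out" ]; then
    echo '"Timestamp (UTC)","Daily Users","Weekly Users","Monthly Users","6-Month Users","Community ID","Subscribers","Posts","Comments"' > "$out"
  fi
  curl -sf "https://${instance}/api/v3/community?name=${community}" \
    | counts_to_csv "$(date -u +%Y-%m-%dT%H:%M:%SZ)" >> "$out"
}

# Only do the network call when given exactly three arguments, e.g.:
#   ./collect_stats.sh YOUR_INSTANCE COMMUNITY_NAME stats.csv
if [ "$#" -eq 3 ]; then
  collect_once "$1" "$2" "$3"
fi
```

Then schedule it with a crontab entry such as `0 2 * * * /path/to/collect_stats.sh YOUR_INSTANCE COMMUNITY_NAME /path/to/stats.csv`. That fires at 02:00 in the cron daemon's time zone, so either run the server in UTC or, if your cron supports it (cronie does), put `CRON_TZ=UTC` on the line above the entry. Cron's minimal `PATH` may not include `jq`, so use absolute paths if rows stop appearing.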

-

That request doesn't need any auth.